Machine learning model partitioning for multi-tenant data using Python

Background

Recently I faced an interesting question: how do you train and deploy ML models in a multi-tenant environment (the same software used by many different companies) when the model needs to behave differently for each company?

Let’s say your operational research company has written some optimisation software that allows other companies to optimise their teams’ productivity. Team managers open your software and enter the number of employees, each employee’s speciality and the team’s overall output on given days. As soon as enough samples are provided, your software allows team managers to model how the number of employees per discipline affects the team’s output.

We will assume that company A will enter different team data from company B. As a result, we do not want company A’s predictions to interfere with company B’s, and vice versa. Two solutions come immediately to mind: you can either create one joint ML model for all tenants, or a separate (partitioned) ML model per tenant.

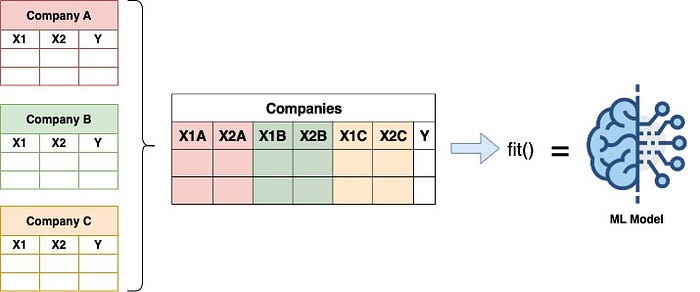

Joint ML Model

For a joint ML model, depending on which ML algorithm you choose, chances are you will need to do some feature engineering to ensure that tenants do not impact each other’s predictions. One way to do this is to create features per tenant (column partitioning). For example, instead of having columns [X1, X2, X3, Y], you might have columns with a suffix per company: [X1A, X2A, X3A, X1B, X2B, X3B, Y].
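To make column partitioning concrete, here is a minimal sketch of the transformation. The function name column_partition and the choice to fill the other tenants’ columns with zeros are assumptions made for illustration; the approach described above does not prescribe a particular fill value.

```python
import numpy as np


def column_partition(X, tenant_ids, n_tenants):
    """Expand shared feature columns into one block of columns per tenant.

    X          : (n_samples, n_features) array of shared features, e.g. [X1, X2, X3]
    tenant_ids : (n_samples,) integer array with values in 0..n_tenants-1
    Returns an (n_samples, n_features * n_tenants) array. Each row keeps its
    features in its own tenant's block; all other blocks are zero (assumption).
    """
    X = np.asarray(X, dtype=float)
    tenant_ids = np.asarray(tenant_ids, dtype=int)
    n_samples, n_features = X.shape
    wide = np.zeros((n_samples, n_features * n_tenants))
    for t in range(n_tenants):
        rows = tenant_ids == t  # boolean mask of rows owned by tenant t
        wide[rows, t * n_features:(t + 1) * n_features] = X[rows]
    return wide


X = np.array([[1.0, 2.0, 3.0],
              [4.0, 5.0, 6.0]])
tenant_ids = np.array([0, 1])  # row 0 belongs to tenant A, row 1 to tenant B
wide = column_partition(X, tenant_ids, n_tenants=2)
print(wide)  # columns are [X1A, X2A, X3A, X1B, X2B, X3B]
```

Note that the width of the result grows with the number of tenants, and the same transformation has to be applied again at prediction time.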

Overall, a joint model comes with several problems, especially if column partitioning is used:

- Big batch training, as all companies’ data is trained together.

- Column partitioning data transformations add additional complexity.

- It is not easy to see how a model is performing per tenant during training.

- To get a prediction, you either need to send [X1A, X2A, X3A, X1B, X2B, X3B] to get Y, or transform [X1, X2, X3] into [X1A, X2A, X3A, X1B, X2B, X3B] before a prediction can be obtained.

For the reasons above, I do not recommend this approach.

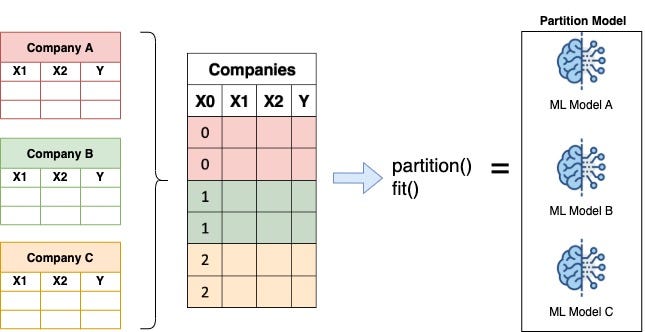

Partitioned ML Model

This approach creates ML model partitions (submodels) based on the partition key that is provided in the X input and each submodel is trained separately. Let’s jump into an example to see how this works in practice.
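Before the example, the mechanism described above can be sketched in a few lines. The class below is a hypothetical stand-in written for illustration, not the Partition implementation used in this article. The name SimplePartition, the assumption that column 0 of X holds the partition key, and the return shapes of predict and score are all assumptions.

```python
import numpy as np
from sklearn.linear_model import LinearRegression


class SimplePartition:
    """Hypothetical stand-in, NOT the article's Partition class.

    Assumes column 0 of X holds the partition key and the remaining
    columns are the features passed to each submodel.
    """

    def __init__(self, estimator_cls, **estimator_kwargs):
        self.estimator_cls = estimator_cls
        self.estimator_kwargs = estimator_kwargs
        self.models_ = {}  # partition key -> fitted submodel

    def fit(self, X, y):
        X, y = np.asarray(X), np.asarray(y)
        for key in np.unique(X[:, 0]):
            rows = X[:, 0] == key
            model = self.estimator_cls(**self.estimator_kwargs)
            # Each submodel only ever sees the rows of its own partition.
            self.models_[key] = model.fit(X[rows, 1:], y[rows])
        return self

    def predict(self, X):
        X = np.asarray(X)
        y_hat = np.empty(len(X))
        for key in np.unique(X[:, 0]):
            rows = X[:, 0] == key
            # Route each row to the submodel trained for its key.
            y_hat[rows] = self.models_[key].predict(X[rows, 1:])
        return y_hat

    def score(self, X, y):
        X, y = np.asarray(X), np.asarray(y)
        scores = []
        for key in np.unique(X[:, 0]):
            rows = X[:, 0] == key
            r2 = self.models_[key].score(X[rows, 1:], y[rows])
            scores.append([float(key), float(r2)])
        return scores


# Tiny noise-free check: tenant 0 follows y = 2x, tenant 1 follows y = 5x.
X_demo = np.array([[0, 1], [0, 2], [0, 3], [1, 1], [1, 2], [1, 3]], dtype=float)
y_demo = np.array([2, 4, 6, 5, 10, 15], dtype=float)
demo = SimplePartition(LinearRegression).fit(X_demo, y_demo)
demo_pred = demo.predict(np.array([[0, 10], [1, 10]], dtype=float))
print(demo_pred)
```

In this sketch a row whose key was never seen during fit raises a KeyError at prediction time; a production version would need an explicit policy for unknown tenants.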

First I need to generate some test data. For this I will use the linear function y = mx + b, where m is a tenant-specific coefficient (called the bias below) and b is a noise term (the error). The same x values are used for tenants a, b and c; a separate bias is drawn per tenant, and the same error is used for all tenants. Tenants a, b and c are represented by the numbers 0, 1 and 2. Finally, the data for companies a, b and c is merged into one Xy array, which is then shuffled (real-world data might not arrive in order).

import numpy as np

import random

samples = 1000

x = np.random.randint(100000, size=(samples, 1))

bias = np.random.randint(1000, size=3)

error = np.random.normal(loc=0, scale=2, size=(samples, 1))

Xy_a = np.hstack([np.full((samples,1), 0), x, bias[0]*x+error])

Xy_b = np.hstack([np.full((samples,1), 1), x, bias[1]*x+error])

Xy_c = np.hstack([np.full((samples,1), 2), x, bias[2]*x+error])

Xy = np.concatenate((Xy_a, Xy_b, Xy_c), axis=0)

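# random.shuffle can duplicate rows of a 2-D NumPy array, so shuffle the rows with NumPy instead

np.random.shuffle(Xy)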

X = Xy[:,0:2]

y = Xy[:,2]

Now that we have our test data set up, we will use Partition and LinearRegression together to find the parameters that fit the generated linear data.

# Partition (the wrapper class described above) must be defined or imported before this point

from sklearn.linear_model import LinearRegression

import pickle

idx_to_test = np.random.randint(low=0, high=len(X), size=5).tolist()

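# Note: LinearRegression's normalize argument was removed in scikit-learn 1.2; omit it on newer versions

partitioned_linear_regression = Partition(LinearRegression, normalize=True, copy_X=True)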

partitioned_linear_regression.fit(X, y)

score_per_partition = partitioned_linear_regression.score(X, y)

print("Coefficient of determination per partition:", score_per_partition)

y_hat = partitioned_linear_regression.predict(X[idx_to_test])

print("y actual:", y[idx_to_test])

print("ŷ predicted:", y_hat)

"""

Output:

Coefficient of determination per partition:

[[0.0, 0.9999999999420465], [1.0, 0.9999999999999939], [2.0, 0.9999999999999867]]

y actual:

[852859.74644801, 798378.18659468, 64008621.49441419, 67190706.48394649, 247394.9859037]

ŷ predicted:

[852858.0062641214, 798380.9980079387, 64008623.800978765, 67190703.79177949, 247391.91450360045]

"""

After your model is trained, you can save it and use it later to make predictions. This can be done with pickle.

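filename = 'partition.pkl'

# Use context managers so the file handles are closed after writing and reading

with open(filename, 'wb') as f:

    pickle.dump(partitioned_linear_regression, f)

with open(filename, 'rb') as f:

    partitioned_linear_regression_from_pkl = pickle.load(f)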

y_hat_from_pkl = partitioned_linear_regression_from_pkl.predict(X[idx_to_test])

print("y actual:", y[idx_to_test])

print("ŷ predicted (using pkl):", y_hat_from_pkl)

"""

Output:

y actual:

[852859.74644801, 798378.18659468, 64008621.49441419, 67190706.48394649, 247394.9859037]

ŷ predicted (using pkl):

[852858.0062641214, 798380.9980079387, 64008623.800978765, 67190703.79177949, 247391.91450360045]

"""

I did not have a chance to test this with MLflow; however, I do not see why the Partition model would not be compatible with it. If you get a chance to test it, please do leave a comment.

Conclusion

The Partition model makes it easy to create submodels based on a data segregation key. Sometimes data segregation is natural and obvious, as we have seen with the multitenancy example above. Other times data can be partitioned through stratification on some feature in your data. With feature stratification, ML models can be trained on a subset of the data rather than the whole, which can deliver better predictions in certain scenarios. It is also possible to combine “natural” partitions such as multitenancy with feature stratification to create composite partitions with the Partition model.
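As a rough illustration of a composite partition, the sketch below combines a tenant id with a stratum derived from a feature into a single integer key. The helper name composite_key, the team_size feature and the bin edge of 10 are hypothetical choices; it also assumes the partition key is expected as a single numeric column.

```python
import numpy as np


def composite_key(tenant_ids, feature, bin_edges):
    """Combine a tenant id and a feature stratum into one integer key."""
    tenant_ids = np.asarray(tenant_ids, dtype=int)
    # np.digitize assigns each value a stratum index in 0..len(bin_edges)
    strata = np.digitize(feature, bin_edges)
    n_strata = len(bin_edges) + 1
    # Each (tenant, stratum) pair maps to a distinct integer
    return tenant_ids * n_strata + strata


tenant_ids = np.array([0, 0, 1, 1])
team_size = np.array([3, 12, 3, 12])  # hypothetical feature to stratify on
keys = composite_key(tenant_ids, team_size, bin_edges=[10])
print(keys)
```

The resulting keys could then be placed in the partition key column of X, for example with np.column_stack([keys, features]), so that each tenant and stratum combination gets its own submodel.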

Note: Code in this article should not be used without thorough testing. If you have suggestions or feedback please do comment.